In the previous class we learned what an MLP (multilayer perceptron) is. We also came across a term called gradient descent, so today we will study gradient descent.

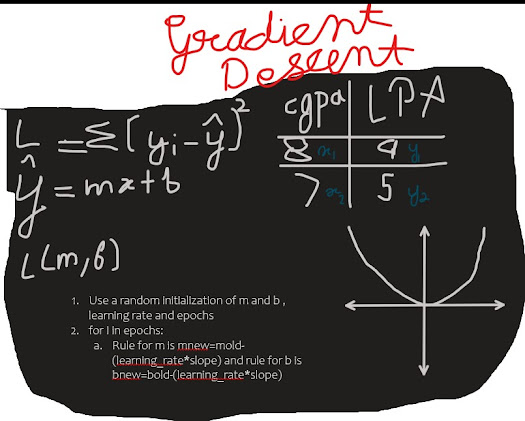

Gradient descent

In mathematics, gradient descent is a first-order iterative optimization algorithm for finding a local minimum of a differentiable function. The idea is to take repeated steps in the opposite direction of the gradient of the function at the current point, because this is the direction of steepest descent.

The image illustrates this idea.
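
The repeated steps against the gradient can also be sketched in a few lines of Python. This is only a minimal illustration, not a full implementation: the example function f(x) = (x - 3)^2, the starting point, the learning rate of 0.1, and the number of steps are all assumptions chosen for this example.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Take repeated steps in the opposite direction of the gradient."""
    x = x0
    for _ in range(steps):
        # Move against the gradient: the direction of steepest descent.
        x = x - learning_rate * grad(x)
    return x


# Hypothetical example function (an assumption for illustration):
# f(x) = (x - 3)^2, which has its minimum at x = 3.
def f(x):
    return (x - 3.0) ** 2


# Its derivative, f'(x) = 2 * (x - 3).
def grad_f(x):
    return 2.0 * (x - 3.0)


x_min = gradient_descent(grad_f, x0=0.0)
print(x_min)  # a value very close to 3
```

With a learning rate this small the steps shrink as x approaches the minimum. A learning rate that is too large can make the steps overshoot, so in practice this value usually needs tuning.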
